The Case of the Disappearing Data

Jesse Dunietz is a Ph.D. student in computer science at Carnegie Mellon University and the founding president of the Public Communication for Researchers program.

The 1990s were a rough time. There was Crystal Pepsi. There was the Macarena. And let’s not even talk about Tickle Me Elmo. But one of the most frustrating things I can remember was the maddening sluggishness of the Internet. Any time I had to email a PowerPoint for school, I’d fire up the modem, wait for the beeps and whistles, start the upload, and go eat dinner, and maybe by the time I got back I’d have sent my single lousy email.

If I was really in a rush, though, I did have one trick up my sleeve: “zipping,” or compressing, the file. Programs like WinZip can grab an 80 MB PowerPoint presentation, chug away for a moment, and package it up into a ZIP file containing exactly the same PowerPoint file, now reduced to one-third the size.

When I first learned this trick, I thought nothing of it. The more I considered it, though, the more compressing files seemed like black magic. The file was smaller, but clearly no data was actually disappearing, because the recipient could still recreate the original. It was as though you could grab a 6-foot package, drop it into a 2-foot box for shipping, and then on the other end take out the original 6-foot package. Where did all the data go in the interim?

Sucking out the air

The package analogy hints at a possible answer. After all, it seems quite reasonable that you could shrink your package down if it contained something inflatable – say, a large exercise ball. Rather than shipping it as is, you could simply deflate the ball and stuff it into a smaller box, with instructions to re-inflate it on the other side. Unfortunately, this analogy only takes us so far: the ball is mostly air, which nobody minds losing, but I’d be very upset if WinZip started chopping out bits of the presentation I’d just spent two days creating. What’s the air that can be sucked out of a PowerPoint file?

To pull this off, computers use some of the same tricks that we humans rely on to process the world around us. For instance, consider a situation where a human has a lot of data to remember: learning a piece of music. Specifically, imagine that you’re the snare drummer for Ravel’s famous “Boléro.”

A few moments of listening to this piece should make it abundantly clear that there are a lot of drumbeats – 4,050, to be precise. That’s an awful lot of timing to remember. Your job gets much easier, though, the moment you realize that the snare drum part exhibits almost unbearable redundancy. Until the last few seconds, the entire part consists of a single sequence of 24 beats, repeated over and over. In psychological terms, there’s just one unit of information – one chunk – that you need to keep in mind. Instead of memorizing every note of the full piece, you can simply reduce the sequence to “chunk chunk chunk…”

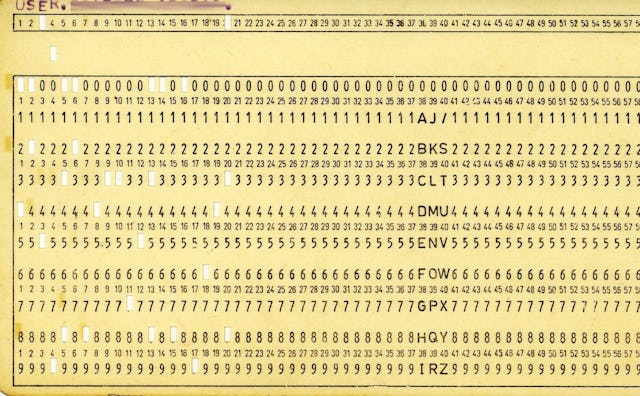

This is essentially how your computer compresses a file. Like a musician seeking structure in a piece of music, a compression program looks for chunks that repeat throughout the file, and replaces them all with shorthands. For example, say my school presentation had contained the old tongue-twister, “How much wood could a woodchuck chuck if a woodchuck could chuck wood?” (I was a strange kid, okay?) The program would notice that “wood,” “could,” and “chuck” all repeat throughout the phrase, so it would replace each one with a chunk name – “X,” “Y,” and “Z,” for instance (see the diagram below). These redundant chunks are the air that gets sucked out of the document.

© Source: Jesse Dunietz

Of course, the receiving computer will still need to know what each of these shorthands means, so the compression program also saves a table that defines each shorthand – a symbol table (shown on the right in the diagram). This table is the equivalent of the instructions for re-inflating the ball: it tells the computer on the other end how to reconstruct the original document.
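To make the woodchuck example concrete, here is a minimal Python sketch of shorthand substitution with a symbol table. It is an illustration only, not how WinZip or any real ZIP program works: the names `SYMBOL_TABLE`, `compress`, and `decompress` are made up for this example, and the table is written by hand rather than discovered by the program.

```python
# Hypothetical shorthand table mirroring the article's example.
# A real compressor would find the repeated chunks on its own.
SYMBOL_TABLE = {"X": "wood", "Y": "could", "Z": "chuck"}


def compress(text, table):
    """Replace every occurrence of each chunk with its shorthand symbol."""
    for symbol in table:
        # A shorthand must not already occur in the text; otherwise the
        # receiver could not tell a shorthand from an ordinary letter.
        if symbol in text:
            raise ValueError(f"symbol {symbol!r} already appears in the text")
    for symbol, chunk in table.items():
        text = text.replace(chunk, symbol)
    return text


def decompress(packed, table):
    """Use the symbol table to expand each shorthand back into its chunk."""
    for symbol, chunk in table.items():
        packed = packed.replace(symbol, chunk)
    return packed


phrase = "How much wood could a woodchuck chuck if a woodchuck could chuck wood?"
packed = compress(phrase, SYMBOL_TABLE)
print(packed)  # How much X Y a XZ Z if a XZ Y Z X?
print(decompress(packed, SYMBOL_TABLE) == phrase)  # True
```

The packed phrase is about half as long as the original, but the symbol table has to travel with it. For a phrase this short the net saving is modest; the payoff grows as the same chunks recur across a longer file.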

Redundancy explains the mystery of compression and suggests lots of ways beyond symbol tables to compress data further. Indeed, our habit of sending around huge media files, such as songs and videos, is only possible because of clever methods to eliminate even more redundancy. But there’s another mystery hiding in this explanation. If there’s so much redundancy to squeeze out, my original PowerPoint files seem unforgivably verbose. Why store an 80-megabyte file when about 27 megabytes will do?

Of course, the designers of PowerPoint were perfectly well aware that they could compress the files, but size was not the only thing they had to worry about. Imagine if every time you wanted to use your exercise ball, you had to inflate it first and deflate it when you were done. That would be extremely space efficient, but not terribly convenient. We face the same tradeoff between space efficiency and convenience with our cognitive resources: you could work out how many cups are in a pint every time you cook, but at some point it becomes easier just to memorize it. Similarly, if your computer had to decompress a file each time it read it, every activity on the computer would start to feel like those 56K modem days. Leaving the redundancy in means more data, but also a lot less hassle.
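Both sides of this tradeoff can be seen in a few lines of Python. The sketch below uses the standard library's `zlib` module as a stand-in compressor; that choice is an assumption for illustration, since nothing here specifies which algorithm a given program uses. It compresses one highly redundant input and one random input of the same length, then confirms that getting the original back requires an explicit decompression step.

```python
import os
import zlib

# Highly redundant data: one short phrase repeated many times.
redundant = b"How much wood could a woodchuck chuck? " * 1000

# Data with essentially no redundancy: random bytes of the same length.
random_bytes = os.urandom(len(redundant))

packed_redundant = zlib.compress(redundant)
packed_random = zlib.compress(random_bytes)

# The redundant input shrinks dramatically; the random input barely changes.
print(len(redundant), "->", len(packed_redundant))
print(len(random_bytes), "->", len(packed_random))

# Nothing is lost, but reading the data back costs an extra step every time.
restored = zlib.decompress(packed_redundant)
print(restored == redundant)  # True
```

The sizes printed for the random input vary from run to run, but they stay close to the original length: with no repeated chunks, there is no air to suck out.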

For computers, just like for humans, redundancy is a tradeoff. Too little redundancy, and you’re stuck re-deriving the same information every two seconds. Too much redundancy, and that firehose of a Netflix video blows up your garden hose of an internet connection.

It’s a good thing we usually get that balance right – it’s only thanks to both redundancy and compression that I’m able to download a copy of The Shawshank Redemption and play it smoothly off my laptop. Oh, and Braveheart and The Matrix and Schindler’s List, too. Maybe the ’90s weren’t so bad after all.


This article was originally published on